FAQ About the Role of Westerns in Film and Cultural Identity

What is the definition of a Western film?

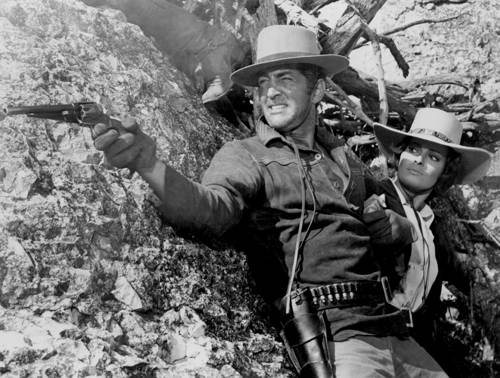

A Western is a film genre set primarily in the American Old West during the latter half of the 19th century. Its stories often revolve around cowboys, outlaws, and lawmen, and explore themes such as frontier justice, survival, and the conflict between civilization and wilderness. Westerns are known for iconic settings such as deserts and frontier towns, and they often feature adventure, gunfights, and horseback chases.