FAQ About the Role of Westerns in Film and Cultural Identity

How have Western films influenced international perceptions of America?

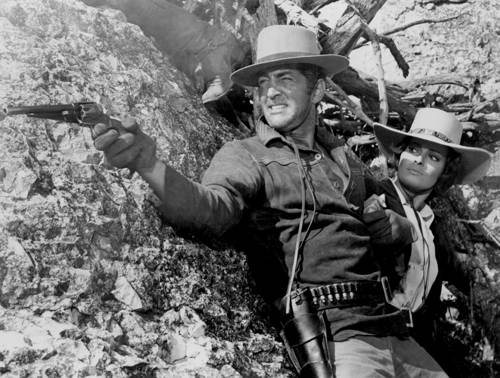

Western films have significantly shaped international perceptions of America by projecting the rugged, adventurous spirit associated with the American frontier. Audiences around the world have both embraced and critiqued this image, and it has influenced how they view American history, character, and values. The heroic cowboy, for instance, often colors foreign understandings of American self-reliance and of the country's history of westward expansion.